Introduction

When dealing with large file uploads, efficiency and speed are crucial. AWS S3 provides two powerful features to help with this: Multipart Upload and Transfer Acceleration. In this blog post, we'll explore how to use these features with Node.js to optimize your file upload process.

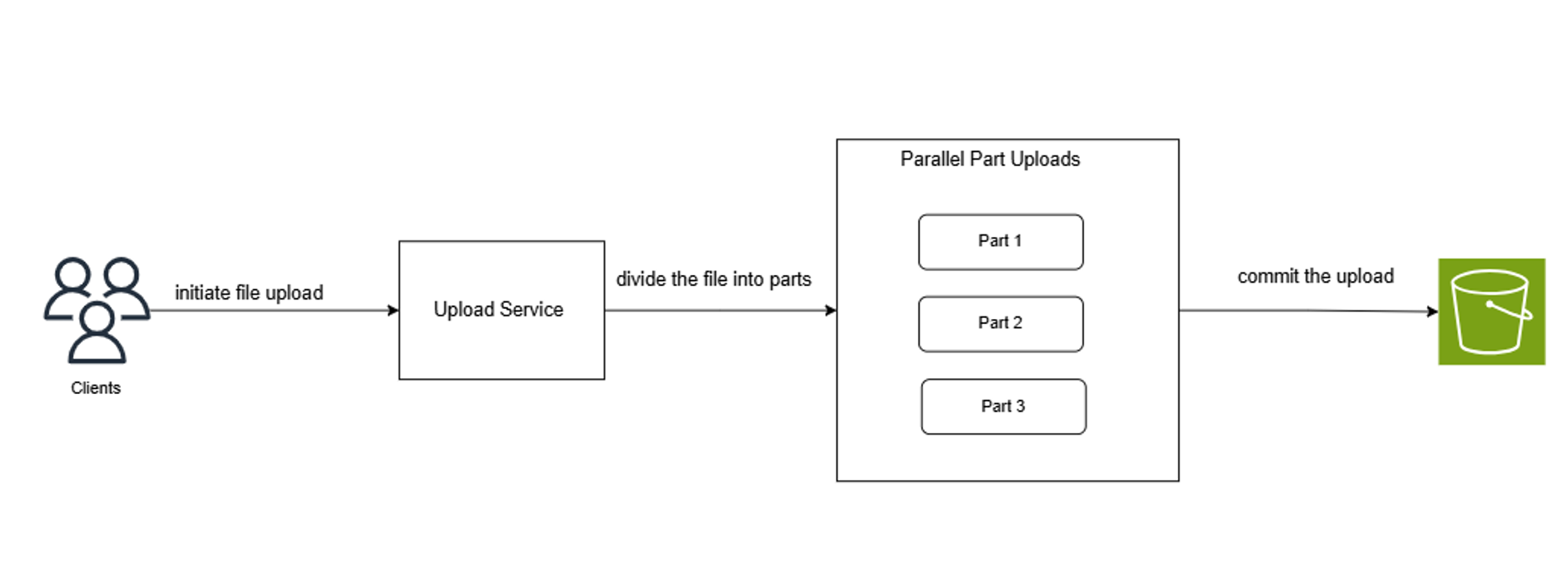

What is Multipart Upload?

Multipart upload lets you break a large file into smaller, manageable parts and upload them individually. Each part uploads independently, often in parallel, which can drastically speed up the process. If any part fails, you only need to retry that specific part, saving you from starting the entire upload over again.

Multipart upload is required for files larger than 5 GB, the maximum size of a single PUT request in AWS S3, and AWS recommends considering it once objects reach about 100 MB. It is also a good fit when an upload might take a long time. Each part must be between 5 MB and 5 GB (only the last part may be smaller), and one upload can contain at most 10,000 parts.
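To make those limits concrete, here is a minimal sketch that picks a part size for a given file. The helper name `choosePartSize` is hypothetical (it is not part of the AWS SDK). It starts from the 5 MB minimum (5 MiB, computed the same way as in the code later in this post) and grows the part size only when the file would otherwise need more than 10,000 parts.

```javascript
// S3 multipart limits described above
const MIN_PART_SIZE = 5 * 1024 * 1024; // 5 MiB, minimum for every part except the last
const MAX_PART_SIZE = 5 * 1024 * 1024 * 1024; // 5 GiB per part
const MAX_PARTS = 10000; // at most 10,000 parts per upload

// Hypothetical helper: smallest part size that is at least `preferred` bytes
// and keeps the upload within MAX_PARTS parts.
function choosePartSize(fileSize, preferred = MIN_PART_SIZE) {
  const needed = Math.ceil(fileSize / MAX_PARTS);
  const partSize = Math.max(preferred, MIN_PART_SIZE, needed);
  if (partSize > MAX_PART_SIZE) {
    throw new RangeError("File is too large for a single multipart upload");
  }
  return partSize;
}

const GiB = 1024 ** 3;
console.log(choosePartSize(100 * 1024 * 1024)); // 5242880 (5 MiB is enough)
console.log(choosePartSize(100 * GiB)); // 10737419 (about 10.24 MiB)
```

With 5 MiB parts, a 100 GiB file would need 20,480 parts, twice the limit, which is why the part size has to grow for very large files.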

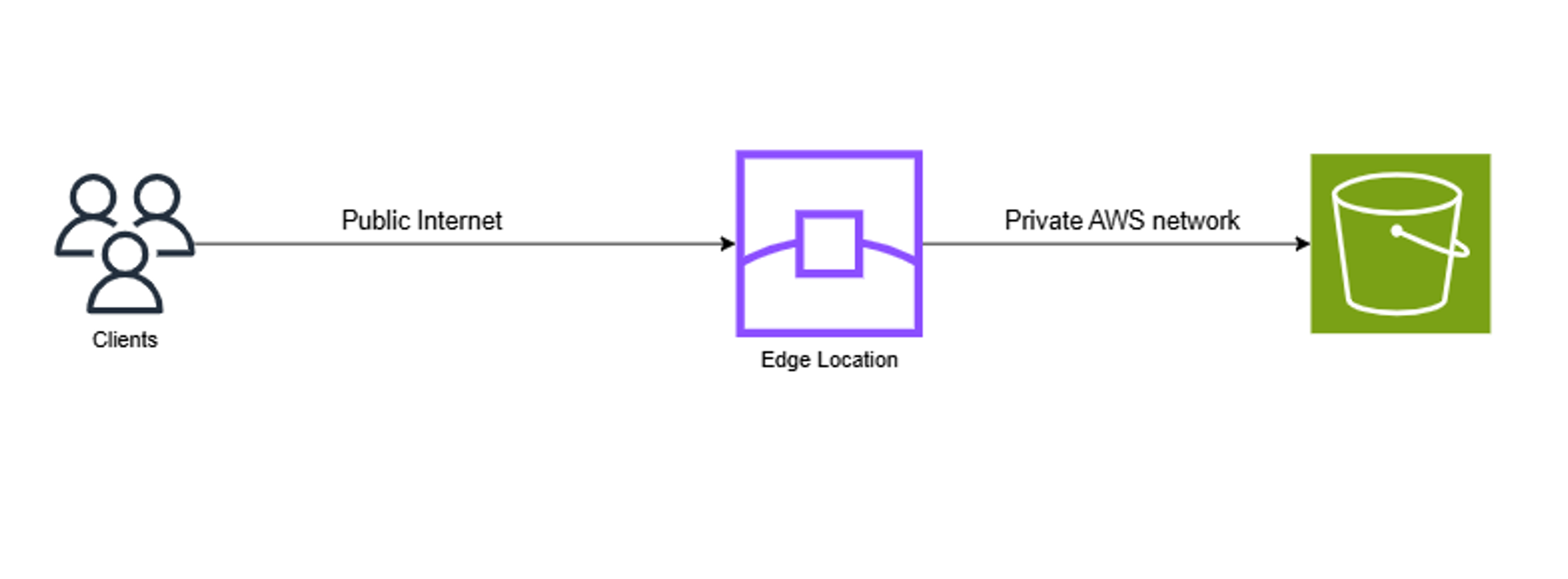

What is Transfer Acceleration?

Transfer Acceleration uses Amazon CloudFront's globally distributed edge locations to speed up uploads to S3. This feature helps to reduce latency and significantly improve upload speeds, making it especially beneficial for users located far from the S3 bucket's region.

Requests are routed to the nearest edge location and from there to the bucket over an optimized network path, which reduces latency on long-distance transfers. Consider Transfer Acceleration when you need to upload large files quickly from distant locations or when your users are spread around the world. However, it comes at an additional cost: it is billed per GB transferred, and the rate depends on the edge location that accelerates the transfer. It’s best to use this feature when faster uploads justify the extra cost, particularly for time-sensitive or large-scale uploads.
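The SDK builds the accelerated endpoint for you (it is switched on later in this post through the S3Client option `useAccelerateEndpoint`), but a small sketch shows what changes on the wire. The function `s3Endpoint` below is hypothetical and only formats hostnames: without acceleration, requests go to the bucket's regional endpoint; with it, they go to the global `s3-accelerate` endpoint, which carries no region.

```javascript
// Hypothetical helper: the HTTPS endpoint a client targets for a bucket,
// with or without Transfer Acceleration (virtual-hosted-style URLs).
function s3Endpoint(bucketName, region, { accelerate = false } = {}) {
  if (accelerate) {
    // Routed through the nearest edge location, then on to the bucket's region
    return `https://${bucketName}.s3-accelerate.amazonaws.com`;
  }
  return `https://${bucketName}.s3.${region}.amazonaws.com`;
}

console.log(s3Endpoint("doodooti", "eu-central-1"));
// https://doodooti.s3.eu-central-1.amazonaws.com
console.log(s3Endpoint("doodooti", "eu-central-1", { accelerate: true }));
// https://doodooti.s3-accelerate.amazonaws.com
```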

From Concept to Execution

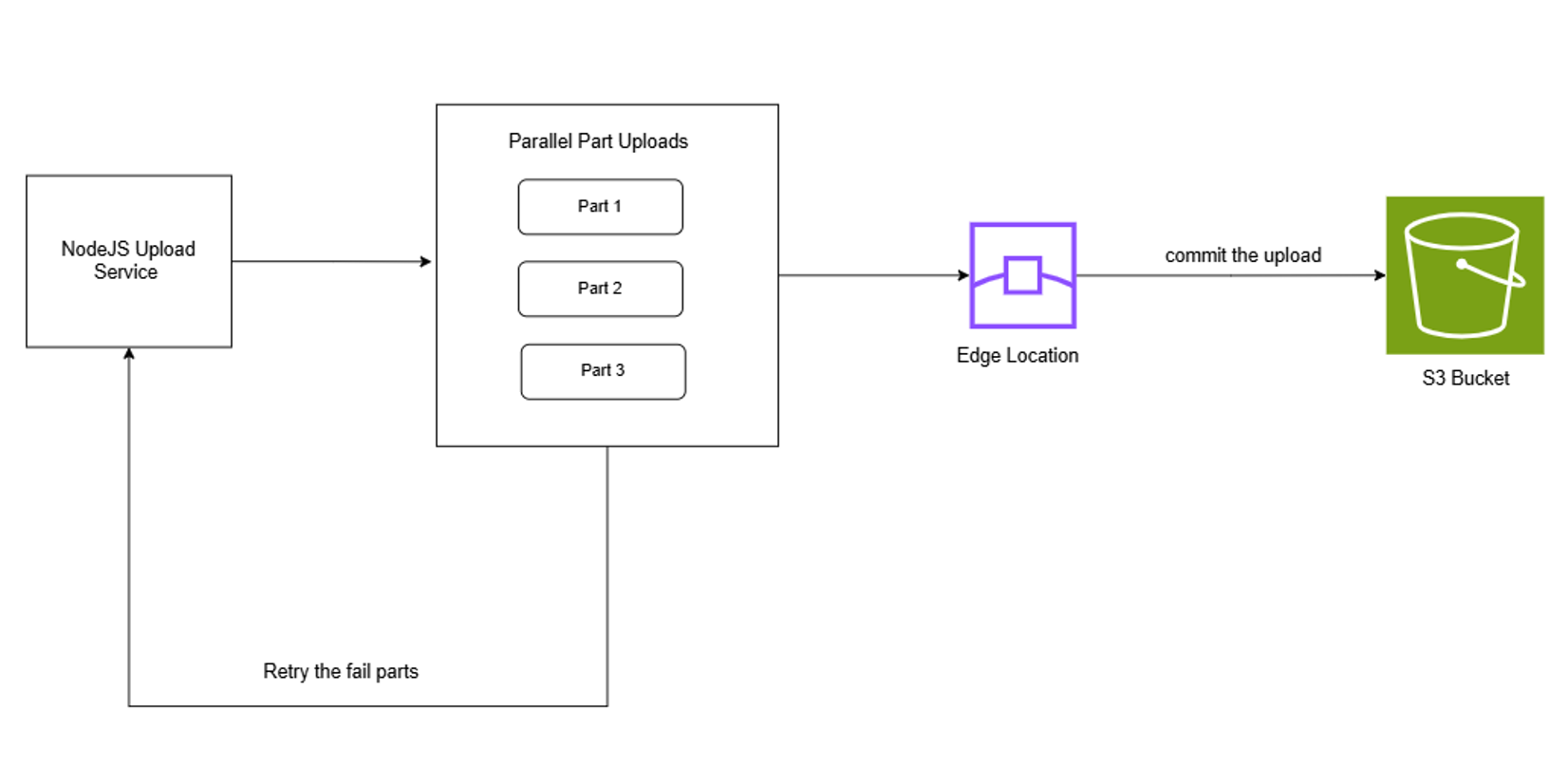

In this post, I’ll show you how to create a Node.js service that makes it easy to upload large files. We’ll use multipart upload, add an option for Transfer Acceleration, and include a retry feature to handle any parts that fail.

Setting Up

First, create a folder named S3-Upload-Service, initialize a Node.js project in it, and install the Amazon S3 client from the AWS SDK for JavaScript v3:

```bash
mkdir S3-Upload-Service
cd S3-Upload-Service
npm init
npm install @aws-sdk/client-s3
```

The AWS SDK is used to interact with AWS services and make API calls from your application. However, it requires proper configuration, including credentials, to authenticate and access AWS resources.

To configure the credentials, create an IAM user and obtain its access key ID and secret access key. The SDK signs its API calls with these keys, so it can only do what the IAM user's permissions allow; for this uploader, s3:PutObject on objects in the target bucket covers creating, uploading, and completing multipart uploads. Avoid committing these keys to source control.
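As one way to keep the keys out of your source code, here is a hypothetical helper (`readCredentialsFromEnv` is our own name, not an SDK function) that reads them from `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`, the standard environment variables the AWS SDK also recognizes, and fails fast when they are missing.

```javascript
// Hypothetical helper: read the IAM user's keys from environment variables
// instead of hard-coding them. `env` defaults to the real process environment.
function readCredentialsFromEnv(env = process.env) {
  const accessKeyId = env.AWS_ACCESS_KEY_ID;
  const secretAccessKey = env.AWS_SECRET_ACCESS_KEY;
  if (!accessKeyId || !secretAccessKey) {
    throw new Error("Set AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY before running the uploader");
  }
  return { accessKeyId, secretAccessKey };
}
```

Its result can be passed to the uploader's constructor in place of literal strings, for example `const { accessKeyId, secretAccessKey } = readCredentialsFromEnv();`.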

Now that our environment is set up, we can begin implementing the code logic. Let's start by creating a file named index.js and adding the following code:

```javascript
// index.js
const {
  S3Client,
  CreateMultipartUploadCommand,
  UploadPartCommand,
  CompleteMultipartUploadCommand,
} = require("@aws-sdk/client-s3");
const fs = require("fs");
const path = require("path");

class S3MultipartUploader {
  constructor(region = "us-east-1", accessKeyId, secretAccessKey) {
    this.s3Client = new S3Client({
      credentials: {
        accessKeyId,
        secretAccessKey,
      },
      region,
    });
  }

  // This method will contain the upload logic
  async uploadFile(filePath, bucketName) {}
}
```

The code above defines a class that manages file uploads to S3. Once you create an instance, you call its uploadFile method, which handles the upload logic. The AWS SDK client is configured in the constructor, which receives the region, accessKeyId, and secretAccessKey.

Now it’s time for the best part: implementing the uploadFile method.

```javascript
async uploadFile(filePath, bucketName) {
  try {
    // File details
    const fileSize = fs.statSync(filePath).size;
    const fileName = path.basename(filePath);

    // Multipart upload configuration
    let partSize = 5 * 1024 * 1024; // 5 MB per part (S3 minimum for every part except the last)
    const numberOfParts = Math.ceil(fileSize / partSize);
    if (numberOfParts > 10000) {
      throw new Error("S3 allows at most 10,000 parts; use a larger partSize");
    }

    // Initiate multipart upload
    // Upload parts
    // Wait for all parts to upload
    // Sort parts to ensure correct order
    // Complete multipart upload
  } catch (error) {
    console.error("Error during multipart upload:", error);
    throw error;
  }
}
```

This code reads the file's size and name, sets each part to 5 MB (the minimum part size S3 accepts; only the last part may be smaller), and calculates how many parts are needed.
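The part arithmetic the upload loop relies on can be checked in isolation. This sketch uses a hypothetical helper, `computePartRanges`, that lists each part's byte range: `end` is exclusive, while `streamEnd` is the inclusive end offset that `fs.createReadStream` expects.

```javascript
// Hypothetical helper: byte ranges for each part of a file.
function computePartRanges(fileSize, partSize) {
  const ranges = [];
  const numberOfParts = Math.ceil(fileSize / partSize);
  for (let partNumber = 1; partNumber <= numberOfParts; partNumber++) {
    const start = (partNumber - 1) * partSize;
    const end = Math.min(start + partSize, fileSize); // exclusive; last part may be shorter
    ranges.push({ partNumber, start, end, streamEnd: end - 1, length: end - start });
  }
  return ranges;
}

const MiB = 1024 * 1024;
const ranges = computePartRanges(12 * MiB, 5 * MiB);
console.log(ranges.map((r) => r.length)); // [ 5242880, 5242880, 2097152 ]
```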

Next, we’ll walk through the remaining steps marked by comments in the snippet, starting with initiating the multipart upload.

```javascript
// Initiate multipart upload
const multipartUpload = await this.s3Client.send(
  new CreateMultipartUploadCommand({
    Bucket: bucketName,
    Key: fileName,
  })
);
console.log(`Multipart upload initiated for ${fileName}`);
```

CreateMultipartUploadCommand starts a multipart upload in the S3 bucket and returns an UploadId. Every later request for this upload (uploading a part, completing the upload, or aborting it) must include that UploadId. This is what allows parts to be uploaded in parallel and, if you keep the ID, lets an interrupted upload be resumed. We’ll use it in the next steps.
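Resuming only works if you keep the UploadId (for example, in a file or database) after CreateMultipartUploadCommand returns. A minimal sketch of the resume logic, assuming you have already collected the part numbers S3 has for that UploadId (the SDK's ListPartsCommand returns them, paginated): `remainingPartNumbers` is a hypothetical helper that works out which parts still need uploading.

```javascript
// Hypothetical helper: part numbers (1..numberOfParts) not yet uploaded.
function remainingPartNumbers(numberOfParts, uploadedPartNumbers) {
  const done = new Set(uploadedPartNumbers);
  const remaining = [];
  for (let n = 1; n <= numberOfParts; n++) {
    if (!done.has(n)) remaining.push(n);
  }
  return remaining;
}

console.log(remainingPartNumbers(5, [1, 2, 4])); // [ 3, 5 ]
```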

```javascript
// Upload parts
const uploadPromises = [];
const uploadedParts = [];

for (let partNumber = 1; partNumber <= numberOfParts; partNumber++) {
  const start = (partNumber - 1) * partSize;
  const end = Math.min(start + partSize, fileSize); // exclusive; the last part may be shorter

  // Read only this part's byte range (createReadStream's `end` is inclusive)
  const readStream = fs.createReadStream(filePath, { start, end: end - 1 });

  const uploadPromise = this.s3Client
    .send(
      new UploadPartCommand({
        Bucket: bucketName,
        Key: fileName,
        PartNumber: partNumber,
        UploadId: multipartUpload.UploadId,
        Body: readStream,
        ContentLength: end - start,
      })
    )
    .then((uploadPartResult) => {
      // Record the ETag; CompleteMultipartUpload needs it for every part
      uploadedParts.push({ ETag: uploadPartResult.ETag, PartNumber: partNumber });
    });

  uploadPromises.push(uploadPromise);
}
```

The code above splits the file into byte ranges of up to 5 MB and uploads each range as a separate part with UploadPartCommand, creating a promise for each upload.

For each part, fs.createReadStream, a built-in Node.js function, opens a stream over just that part's range of the file, defined by the start and end values. Its end option is inclusive, hence end - 1.

The UploadPartCommand is then sent to S3 with the bucket name, the file name (Key), the PartNumber, the UploadId, and the read stream as the Body. Once a part is uploaded, its ETag and PartNumber are pushed into the uploadedParts array so we can track which parts are done.

Each upload's promise is added to the uploadPromises array. Because the loop does not wait for one part before starting the next, all parts upload concurrently.
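Starting every part at once is fine for a small test file, but a 10 GB file split into 5 MB parts means roughly 2,000 simultaneous requests and open file streams. A common alternative is a small worker pool. The sketch below (`mapWithConcurrency` is a hypothetical helper, not an SDK API) runs at most `limit` tasks at a time, keeps results in input order, and stops taking new items after the first failure.

```javascript
// Hypothetical helper: run `worker` over `items` with at most `limit` in flight.
async function mapWithConcurrency(items, limit, worker) {
  const results = new Array(items.length);
  let nextIndex = 0;
  let failed = false;

  // Each runner keeps taking the next unclaimed item until none are left
  async function runner() {
    while (!failed && nextIndex < items.length) {
      const index = nextIndex++;
      try {
        results[index] = await worker(items[index], index);
      } catch (error) {
        failed = true; // stop the other runners from starting new items
        throw error;
      }
    }
  }

  const runnerCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: runnerCount }, () => runner()));
  return results;
}

// Example: six fake "parts", at most two in flight at a time
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
mapWithConcurrency([1, 2, 3, 4, 5, 6], 2, async (partNumber) => {
  await sleep(10);
  return { PartNumber: partNumber };
}).then((parts) => console.log(parts.length)); // 6

// Possible use inside uploadFile (uploadOnePart is a hypothetical method):
// const parts = await mapWithConcurrency(partNumbers, 4, (n) => this.uploadOnePart(n));
```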

```javascript
// Wait for all parts to upload
await Promise.all(uploadPromises);

// Sort parts to ensure correct order
uploadedParts.sort((a, b) => a.PartNumber - b.PartNumber);
```

After all parts are uploaded, we sort them: because the uploads run in parallel, parts can finish in any order, and CompleteMultipartUpload expects the list in ascending PartNumber order. The sorted list is used in the next step.
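A small sketch that sorts the collected parts and also checks that none is missing before calling CompleteMultipartUploadCommand. `toCompletedParts` is a hypothetical helper, and its contiguity check assumes parts are numbered 1 to N, as in this uploader; S3 itself only requires ascending order.

```javascript
// Hypothetical helper: ascending Parts list, failing early if a part is missing.
function toCompletedParts(uploadedParts, numberOfParts) {
  const parts = [...uploadedParts].sort((a, b) => a.PartNumber - b.PartNumber);
  if (parts.length !== numberOfParts) {
    throw new Error(`Expected ${numberOfParts} parts, got ${parts.length}`);
  }
  parts.forEach((part, i) => {
    if (part.PartNumber !== i + 1) throw new Error(`Missing part ${i + 1}`);
  });
  return parts;
}

const completed = toCompletedParts(
  [
    { ETag: '"c"', PartNumber: 3 },
    { ETag: '"a"', PartNumber: 1 },
    { ETag: '"b"', PartNumber: 2 },
  ],
  3
);
console.log(completed.map((p) => p.PartNumber)); // [ 1, 2, 3 ]
```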

```javascript
// Complete multipart upload
const completeMultipartUploadResult = await this.s3Client.send(
  new CompleteMultipartUploadCommand({
    Bucket: bucketName,
    Key: fileName,
    UploadId: multipartUpload.UploadId,
    MultipartUpload: { Parts: uploadedParts },
  })
);
console.log("File uploaded successfully:", completeMultipartUploadResult.Location);
return completeMultipartUploadResult;
```

In this step, we tell S3 that all parts have been uploaded by sending the ETag and PartNumber of each one; S3 then assembles the parts into the final object.
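If a part fails for good, the code above rethrows the error but leaves the multipart upload open, and S3 keeps (and bills for) the parts already uploaded until the upload is aborted. A minimal sketch of one way to handle this: `runOrAbort` is a hypothetical helper that runs the uploads and calls an abort function, such as sending AbortMultipartUploadCommand from @aws-sdk/client-s3, before rethrowing the original error. Because parts still in flight can finish after an abort, a bucket lifecycle rule that aborts incomplete multipart uploads is a useful backstop.

```javascript
// Hypothetical helper: run `task`; if it fails, call `abort`, then rethrow.
async function runOrAbort(task, abort) {
  try {
    return await task();
  } catch (error) {
    try {
      await abort();
    } catch (abortError) {
      console.error("Abort failed; the upload may need manual cleanup:", abortError);
    }
    throw error; // surface the original failure, not the abort result
  }
}

// Possible wiring inside uploadFile (assumes AbortMultipartUploadCommand is
// also imported from "@aws-sdk/client-s3"):
// await runOrAbort(
//   () => Promise.all(uploadPromises),
//   () => this.s3Client.send(new AbortMultipartUploadCommand({
//     Bucket: bucketName, Key: fileName, UploadId: multipartUpload.UploadId,
//   }))
// );
```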

At this point the upload works, but a single failed part makes the whole upload fail. Let’s add a retry mechanism for failed parts, starting with a new method called retry inside the class.

```javascript
async retry(fn, args, retryCount = 0) {
  try {
    return await fn(...args);
  } catch (error) {
    if (retryCount < 3) {
      console.log(`Attempt ${retryCount + 1} failed. Retrying...`);
      // Exponential backoff: wait 1 s, 2 s, then 4 s
      await new Promise((resolve) => setTimeout(resolve, 1000 * Math.pow(2, retryCount)));
      return this.retry(fn, args, retryCount + 1);
    }
    throw error;
  }
}
```

The retry method calls fn again up to three times after the first failure (four attempts in total), using exponential backoff: it waits 1, 2, and then 4 seconds between attempts. Keep in mind that the SDK client already retries some transient errors on its own (see the maxAttempts client option); this method adds an application-level retry on top. After implementing it, we need to update the upload-parts step to use it.
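One possible refinement, sketched as a standalone function rather than the class method above: `retryWithJitter` (a hypothetical name, with defaults of our own choosing) makes the retry count and base delay parameters and applies "full jitter", picking a random delay between zero and the exponential cap so that many parts failing at the same moment don't all retry in lockstep.

```javascript
// Hypothetical helper: exponential backoff with full jitter.
// `fn` receives the zero-based attempt number.
async function retryWithJitter(fn, { retries = 3, baseDelayMs = 1000 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn(attempt);
    } catch (error) {
      if (attempt >= retries) throw error; // out of retries: give up
      const cap = baseDelayMs * 2 ** attempt; // 1x, 2x, 4x, ... the base delay
      const delay = Math.random() * cap; // full jitter: anywhere in [0, cap)
      console.log(`Attempt ${attempt + 1} failed. Retrying in ${Math.round(delay)} ms...`);
      await new Promise((resolve) => setTimeout(resolve, delay));
    }
  }
}
```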

```javascript
// Upload parts
const uploadPromises = [];
const uploadedParts = [];

for (let partNumber = 1; partNumber <= numberOfParts; partNumber++) {
  const start = (partNumber - 1) * partSize;
  const end = Math.min(start + partSize, fileSize); // exclusive; the last part may be shorter

  // Upload part with retry mechanism. The command and its read stream are
  // built inside the callback, so every attempt gets a fresh stream: a stream
  // consumed by a failed attempt cannot be read again.
  const uploadPromise = this.retry(
    () =>
      this.s3Client.send(
        new UploadPartCommand({
          Bucket: bucketName,
          Key: fileName,
          PartNumber: partNumber,
          UploadId: multipartUpload.UploadId,
          Body: fs.createReadStream(filePath, { start, end: end - 1 }),
          ContentLength: end - start,
        })
      ),
    []
  ).then((uploadPartResult) => {
    uploadedParts.push({ ETag: uploadPartResult.ETag, PartNumber: partNumber });
  });

  uploadPromises.push(uploadPromise);
}
```

With this, uploadFile retries any part that fails. The last thing to add is support for S3 Transfer Acceleration.
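Why the stream has to be created per attempt can be shown without any AWS calls. In this sketch, `Readable.from` stands in for `fs.createReadStream`, and `readAll`, `makeBody`, and `demo` are hypothetical names: once the first attempt has read the body, the stream has ended, so only a newly created stream still has data to send.

```javascript
const { Readable } = require("stream");

// Collect every chunk of a readable stream into one Buffer
async function readAll(stream) {
  const chunks = [];
  for await (const chunk of stream) chunks.push(chunk);
  return Buffer.concat(chunks);
}

// Stand-in for fs.createReadStream(filePath, { start, end: end - 1 })
const makeBody = () => Readable.from([Buffer.from("part-data")]);

async function demo() {
  const body = makeBody();
  const firstAttempt = await readAll(body); // the first request consumes the stream
  const freshRetry = await readAll(makeBody()); // a retry with a brand-new stream
  return {
    firstAttempt: firstAttempt.toString(),
    endedAfterFirstAttempt: body.readableEnded, // nothing left for a retry to send
    freshRetry: freshRetry.toString(),
  };
}

demo().then((result) => console.log(result));
```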

Transfer Acceleration is optional, so we’ll update the constructor to take an option that tells the class whether to use it. You also need to enable Transfer Acceleration on the bucket itself, for example in the bucket's properties in the AWS Management Console; without that, accelerated requests won't work. Note also that the bucket name must be DNS-compliant and must not contain periods (.).
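Before turning the option on, it can help to check the bucket name. This sketch uses a hypothetical helper, `canUseTransferAcceleration`, that checks a subset of the S3 bucket naming rules (3 to 63 characters; lowercase letters, digits, hyphens, and periods; starting and ending with a letter or digit) plus the no-periods requirement for acceleration. The commented-out call shows how acceleration could be enabled from code instead of the console, using PutBucketAccelerateConfigurationCommand from @aws-sdk/client-s3.

```javascript
// Hypothetical helper: basic S3 naming rules plus "no periods" for acceleration
function canUseTransferAcceleration(bucketName) {
  const basicRules = /^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$/;
  return basicRules.test(bucketName) && !bucketName.includes(".");
}

// Enabling acceleration on the bucket from code (requires the SDK and permissions):
// await s3Client.send(new PutBucketAccelerateConfigurationCommand({
//   Bucket: "doodooti",
//   AccelerateConfiguration: { Status: "Enabled" },
// }));

console.log(canUseTransferAcceleration("doodooti")); // true
console.log(canUseTransferAcceleration("my.bucket.name")); // false
```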

constructor(region = 'us-east-1', options = {}) {

const {

accessKeyId,

secretAccessKey,

useTransferAcceleration

} = options;

const clientConfig = {

credentials: {

accessKeyId: accessKeyId,

secretAccessKey: secretAccessKey

},

region: region,

useAccelerateEndpoint: useTransferAcceleration,

};

this.s3Client = new S3Client(clientConfig);

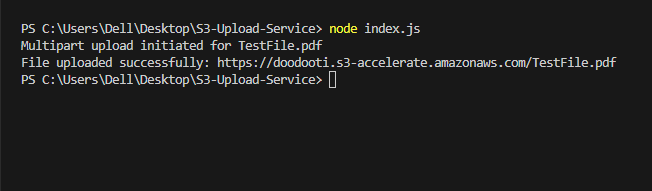

}After all this effort, we can confidently say that all the steps are complete, and our multipart upload is ready to use. Now, let's test it and observe the results!

async function main() {

const options = {

accessKeyId: "Your accessKeyId",

secretAccessKey: "Your secretAccessKey",

useTransferAcceleration: true,

};

// Create uploader with transfer acceleration

const uploader = new S3MultipartUploader("eu-central-1", options);

await uploader.uploadFile(

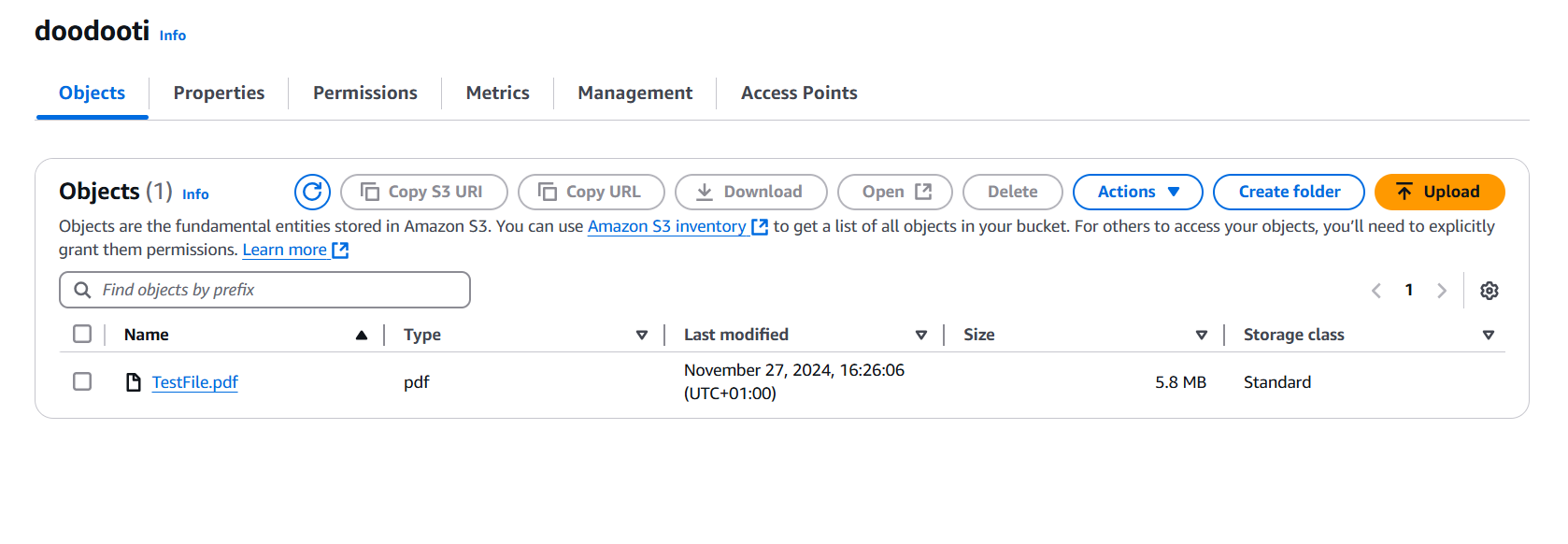

"TestFile.pdf", // Local file path with a size of 5.8 MB

"doodooti" // S3 Bucket Name

);

}

main();

It works 💥
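"It works" tells us the upload succeeded, but not whether acceleration made it faster; the benefit depends on how far the client is from the bucket's region. One way to check, sketched here with a hypothetical `timeUpload` helper, is to time the same upload through two uploader instances, one with useTransferAcceleration set to true and one with it set to false.

```javascript
// Hypothetical helper: time any async call and log the elapsed milliseconds.
async function timeUpload(label, fn) {
  const started = process.hrtime.bigint();
  const result = await fn();
  const elapsedMs = Number(process.hrtime.bigint() - started) / 1e6;
  console.log(`${label}: ${elapsedMs.toFixed(0)} ms`);
  return { result, elapsedMs };
}

// Possible usage (assumes two S3MultipartUploader instances, one per setting):
// await timeUpload("accelerated", () => accelerated.uploadFile("TestFile.pdf", "doodooti"));
// await timeUpload("standard", () => standard.uploadFile("TestFile.pdf", "doodooti"));
```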

Conclusion

Using AWS S3's multipart upload and Transfer Acceleration features can greatly improve the speed and reliability of large file uploads. This example demonstrates how to implement both in a Node.js application, together with retries for parts that fail.

Code: https://github.com/ridhamz/nodejs-s3-files-uploads